Introduction/Overview of software

We worked with a program called Pix4Dmapper Pro - Educational. We used images of the football field and surrounding track at South Middle School that had previously been taken with a UAV. "Pix4Dmapper software automatically converts images taken by hand, by drone, or by plane, and delivers highly precise, georeferenced 2D maps and 3D models" (https://pix4d.com/product/pix4dmapper-pro/).

To get started, we opened Pix4Dmapper and created a new project. I named mine HoppsRa_southmiddletrack and saved it to the working folder inside my own folder (HoppsRa). Next, we added the images we would be using by selecting all 80 JPEGs in the southmiddletrack folder. Each of these pictures was geotagged, meaning each one stored the latitude, longitude, and height at which it was taken.

We accepted the parameters. In the processing options, we left Initial Processing checked and unchecked both Point Cloud and Mesh and DSM, Orthomosaic and Index. Initial processing took a total of 1 hour and 11 minutes. Once it had finished, we unchecked Initial Processing and checked Point Cloud and Mesh and DSM, Orthomosaic and Index so these steps could now be processed. We then repeated the same process for the baseball field images.
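Geotags like these are usually stored in each JPEG's EXIF metadata as degrees, minutes, and seconds plus a hemisphere letter, while GIS tools generally work in signed decimal degrees. The short Python sketch below shows that conversion. The function name `dms_to_decimal` and the sample coordinates are hypothetical illustrations; they are not values from the South Middle School images and not part of Pix4D.

```python
from fractions import Fraction


def dms_to_decimal(degrees, minutes, seconds, ref):
    """Convert degrees/minutes/seconds plus a hemisphere letter
    ('N', 'S', 'E' or 'W') to signed decimal degrees."""
    value = float(degrees) + float(minutes) / 60 + float(seconds) / 3600
    # Southern latitudes and western longitudes are negative by convention.
    return -value if ref.upper() in ("S", "W") else value


# Made-up geotag; EXIF often stores each part as a rational number.
lat = dms_to_decimal(Fraction(45), Fraction(30), Fraction(3600, 100), "N")
lon = dms_to_decimal(Fraction(90), Fraction(15), Fraction(0), "W")
print(round(lat, 4), round(lon, 4))
```

The height value in a geotag (EXIF GPS altitude) is stored separately and needs no conversion of this kind.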

What is the overlap needed for Pix4D to process imagery?

- It is recommended to have at least 75% frontal overlap (with respect to the flight direction) and at least 60% side overlap (between adjacent flight lines).
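Overlap percentages translate directly into how far apart photos and flight lines must be. As a minimal sketch, assume each image covers a rectangular footprint on the ground (set by flying height and camera); the spacing that gives a target overlap is then the footprint length times (1 - overlap). The function name and footprint sizes below are hypothetical, not measurements from our flight.

```python
def spacing_for_overlap(footprint_m, overlap):
    """Distance (m) between image centers that leaves `overlap` (0-1)
    shared between neighboring footprints of length footprint_m."""
    if not 0 <= overlap < 1:
        raise ValueError("overlap must be at least 0 and less than 1")
    return footprint_m * (1 - overlap)


# Hypothetical footprint: 60 m along the flight direction, 80 m across it.
photo_spacing = spacing_for_overlap(60, 0.75)  # frontal overlap
line_spacing = spacing_for_overlap(80, 0.60)   # side overlap
print(f"photo every {photo_spacing:.1f} m, flight lines {line_spacing:.1f} m apart")
```

With those assumed footprints, 75% frontal overlap means a photo about every 15 m, and 60% side overlap means flight lines about 32 m apart.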

What if the user is flying over sand/snow or uniform fields?

For sand/snow:

- Use a high overlap: at least 85% frontal overlap and at least 70% side overlap.

- Set the exposure accordingly to get as much contrast as possible in each image.

For uniform fields:

- Increase the overlap between images to at least 85% frontal overlap and at least 70% side overlap.

- Fly higher. In most cases, flying higher improves the results.

- Have accurate image geolocation and use the Agriculture template.
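Raising overlap from 75%/60% to 85%/70% shrinks both the photo spacing and the flight-line spacing, so the number of images (and therefore processing time) grows quickly. The sketch below estimates the image count for a simple rectangular grid flight. It ignores turns, margins, and camera tilt, and the function name and site and footprint dimensions are hypothetical.

```python
import math


def estimate_image_count(length_m, width_m, footprint_along_m, footprint_across_m,
                         frontal_overlap, side_overlap):
    """Rough image count for a rectangular grid flight over length_m x width_m."""
    # Round to the micrometer to avoid floating-point noise such as 24.000000000000004.
    photo_step = round(footprint_along_m * (1 - frontal_overlap), 6)
    line_step = round(footprint_across_m * (1 - side_overlap), 6)
    photos_per_line = math.ceil(length_m / photo_step) + 1
    flight_lines = math.ceil(width_m / line_step) + 1
    return photos_per_line * flight_lines


# Hypothetical 200 m x 120 m site and 60 m x 80 m image footprint.
standard = estimate_image_count(200, 120, 60, 80, 0.75, 0.60)
high = estimate_image_count(200, 120, 60, 80, 0.85, 0.70)
print(standard, high)
```

Under these assumptions, the higher overlap recommended for sand, snow, and uniform fields nearly doubles the image count (75 versus 144 images).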

What is Rapid Check?

- A different processing option that is much faster but produces significantly less accurate results. It reduces the resolution of the original images and therefore reduces the accuracy of the output.

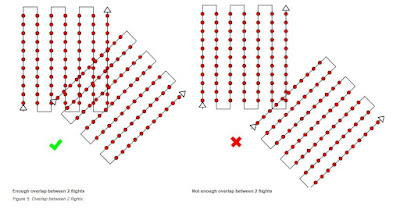
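The link between resolution and accuracy can be made concrete with ground sampling distance (GSD), the ground width covered by one pixel. A commonly used formula for a camera pointing straight down is GSD (cm/pixel) = (sensor width in mm * flying height in m * 100) / (focal length in mm * image width in pixels). Downscaling an image to a fraction of its width divides each pixel's detail by that fraction, so GSD grows by the inverse factor. The camera values and the 1/4 scale below are hypothetical; this report does not state the exact resolution Rapid Check uses.

```python
def gsd_cm_per_px(sensor_width_mm, focal_length_mm, image_width_px, altitude_m):
    """Ground sampling distance (cm/pixel) for a camera pointing straight down."""
    return (sensor_width_mm * altitude_m * 100) / (focal_length_mm * image_width_px)


def gsd_after_downscale(gsd_cm, scale):
    """GSD after resizing an image to `scale` times its original width (0 < scale <= 1)."""
    if not 0 < scale <= 1:
        raise ValueError("scale must be in (0, 1]")
    return gsd_cm / scale


# Hypothetical camera: 13.2 mm sensor, 8.8 mm lens, 5472 px wide, flown at 60 m.
full_res = gsd_cm_per_px(13.2, 8.8, 5472, 60)
quarter_res = gsd_after_downscale(full_res, 0.25)
print(f"{full_res:.2f} cm/px at full resolution, {quarter_res:.2f} cm/px at 1/4 scale")
```

With these assumed values, each pixel goes from covering about 1.6 cm of ground to about 6.6 cm, so small features become harder to locate precisely.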

Can Pix4D process multiple flights? What does the pilot need to maintain if so?

- Yes, it can process multiple flights, but you need to make sure that:

  - Each plan captures the images with enough overlap.

  - There is enough overlap between the 2 image acquisition plans.

  - The different plans are flown under conditions that are as similar as possible (sun direction, weather conditions, no new buildings, etc.).

Can Pix4D process oblique images? What type of data do you need if so?

- Yes. An oblique image is one where the camera axis is not perpendicular to the ground; if the axis were perpendicular to the ground, the image would be considered a vertical image.

- You need data with high overlap.

Figure 3: Showing the differences between vertical images and oblique images.

Are GCPs necessary for Pix4D? When are they highly recommended?

- GCPs are optional but recommended. They are highly recommended when processing a project with no image geolocation.

- If a project has no image geolocation and no Ground Control Points are used:

  - The final results have no scale, orientation, or absolute position information. Therefore, they cannot be used for measurements, overlays, or comparison with previous results.

  - The results may show an inverted 3D model in the rayCloud.

  - The 3D reconstruction may not preserve the shape of the surveyed area.

What is the Quality Report?

- The Quality Report is automatically displayed after each step of processing. It shows how well the processing went, whether all images could be processed, and whether any were rejected. It also shows a preview of the finished product.

Figure 4: Quality Report of the football field after the initial processing

This snippet of the Quality Report shows that all images were calibrated (80 out of 80) and none were rejected. It also shows that initial processing took 1 hour, 10 minutes, and 56 seconds.

Methods

Calculate the area of a surface within the Ray Cloud Editor. Export the feature for use in your maps.

- To calculate the area, I opened the rayCloud and selected New Surface. I then clicked to place vertices around the area I wanted to measure. Finally, I exported this feature as a shapefile to use later in my maps.
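For a flat, projected polygon, the area can be computed from its vertices with the shoelace formula. The sketch below assumes vertex coordinates in a projected system measured in meters, listed in order around the outline. It illustrates the geometry only and is not Pix4D's implementation; Pix4D may also report an area that follows the 3D terrain, which this 2D sketch ignores. The function name and rectangle are hypothetical.

```python
def polygon_area(vertices):
    """Planar area of a simple (non-self-intersecting) polygon via the shoelace formula.

    vertices: list of (x, y) pairs in meters, in order around the outline.
    """
    if len(vertices) < 3:
        raise ValueError("a polygon needs at least 3 vertices")
    twice_area = 0.0
    # Pair each vertex with the next one, wrapping the last back to the first.
    for (x1, y1), (x2, y2) in zip(vertices, vertices[1:] + vertices[:1]):
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2


# Hypothetical 30 m x 20 m rectangle.
print(polygon_area([(0, 0), (30, 0), (30, 20), (0, 20)]))
```

Taking the absolute value means the vertices can be listed clockwise or counterclockwise.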

Figure 5: Process of calculating the surface area of part of the baseball field

Figure 6: Process of calculating the surface area of part of the baseball field

Measure the length of a linear feature in the Ray Cloud. Export the feature for use in your maps.

- This was similar to measuring the area of a surface. I again opened the rayCloud, but this time selected New Polyline and measured the length of the bleachers. I then exported this line feature as a shapefile to use later in my maps.
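A polyline's length is the sum of the straight-line distances between consecutive vertices. The minimal sketch below assumes (x, y, z) vertex coordinates in meters and computes both the 3D length and the horizontal (2D) length that ignores elevation. The coordinates and function names are hypothetical, and this is not necessarily how Pix4D computes its reported lengths. It uses `math.dist`, available in Python 3.8 and later.

```python
import math


def polyline_length_3d(points):
    """Sum of 3D distances between consecutive (x, y, z) vertices."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


def polyline_length_2d(points):
    """Same polyline measured horizontally, ignoring the z (height) values."""
    flat = [(x, y) for x, y, _ in points]
    return sum(math.dist(a, b) for a, b in zip(flat, flat[1:]))


# Hypothetical vertices: 5 m horizontally, then 12 m straight up.
line = [(0, 0, 0), (3, 4, 0), (3, 4, 12)]
print(polyline_length_3d(line), polyline_length_2d(line))
```

For a feature like bleachers that climbs in height, the two lengths can differ noticeably, so it is worth knowing which one a tool reports.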

Figure 7: Measuring length of the bleachers using polyline tool

Figure 8: Measuring length of the bleachers using the polyline tool.

Calculate the volume of a 3D object. Export the feature for use in your maps.

- This tool required a little more work. I measured the volume of the baseball field's dugout. Once I had placed the vertices, I had to click the "Update Measurements" button so that the volume of the dugout could be calculated. The results are shown below.
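Conceptually, a volume measurement compares the reconstructed surface to a base surface under the object. As a minimal sketch, assume the surface is a grid of heights (like a DSM) inside the measured outline and that the base is a flat plane at a single height. Each cell then contributes its height difference times the cell area, split into volume above the base (cut) and volume below it (fill). Pix4D's base surface and algorithm may differ; the function name and heights here are made up.

```python
def grid_volumes(heights, cell_size_m, base_height_m):
    """Return (cut, fill) volumes in cubic meters for a grid of surface heights.

    heights: rows of surface heights (m) for cells inside the measured outline.
    cut: volume above the flat base; fill: volume needed to raise low cells to the base.
    """
    cell_area = cell_size_m * cell_size_m
    cut = fill = 0.0
    for row in heights:
        for z in row:
            diff = z - base_height_m
            if diff > 0:
                cut += diff * cell_area
            else:
                fill -= diff * cell_area  # diff <= 0, so this adds |diff| * area
    return cut, fill


# Hypothetical 2 x 2 grid of 0.5 m cells, base plane at 1.0 m.
cut, fill = grid_volumes([[1.0, 2.0], [0.5, 1.5]], 0.5, 1.0)
print(cut, fill)
```

This also shows why the vertices matter: they define both which cells are counted and where the base surface sits.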

Figure 9: Results for finding volume of baseball dugout

Figure 10: Finding the volume of the dugout and where I placed the vertices.

Figure 11: Video of baseball field animation

Above (Figure 11) is the video I created showing a 3D view of the baseball field. It may be slow to load at first, but it should play. It takes you through different views of the baseball field as if you were seeing it from the drone's perspective.

Results/Maps

Figure 12: Map of Baseball Field

This map shows all of the images stitched together into a single image. With this map created, I could then take measurements of surface area and volume.

Figure 13: Map of South Middle School Track

After all of this, I realized how much Pix4Dmapper can do, and I did not even begin to use most of its capabilities. The program can create many other kinds of maps for a wide range of applications. The only drawback is the computing time: processing these images takes hours.

Sources

- https://support.pix4d.com/hc/en-us/articles/202557459-Step-1-Before-Starting-a-Project-1-Designing-the-Image-Acquisition-Plan-a-Selecting-the-Image-Acquisition-Plan-Type#gsc.tab=0

- Photos for the maps were taken by the drone.

- All other screenshots were taken by me while working with the data.